There are no items in your cart

Add More

Add More

| Item Details | Price | ||

|---|---|---|---|

Mastering the art of finding the optimal boundary between classes.

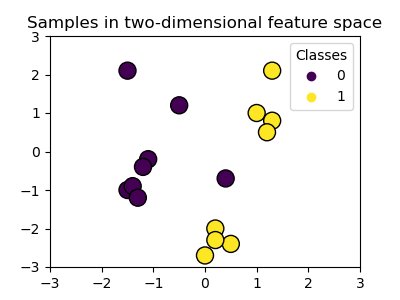

Imagine you have a scatter plot with two different groups of dots (say, blue and green). How would you draw a line to separate them? You could draw many possible lines! But which one is the *best*? Support Vector Machine (SVM) is a powerful machine learning algorithm that tackles exactly this problem, aiming to find the optimal boundary between classes.

SVM is primarily used for Classification tasks (though it can be adapted for Regression), and it's known for its effectiveness, especially in high-dimensional spaces (when you have many input features) and situations where the separation isn't perfectly clean.

Main Technical Concept: SVM is a supervised learning algorithm that finds an optimal hyperplane (a decision boundary) that best separates data points belonging to different classes by maximizing the margin (distance) between the hyperplane and the nearest data points of any class (the support vectors).

Think of the data points for each class as houses in different neighborhoods. SVM tries to draw the widest possible street between the neighborhoods, ensuring the street doesn't touch any houses.

By maximizing the margin, SVM creates a decision boundary that is generally more robust and less sensitive to small variations in the data compared to a boundary that just barely separates the classes.

The "widest street" idea works perfectly if the neighborhoods are clearly separated with empty space between them (linearly separable data). But real data is often messy. SVM evolved to handle this:

Image Credit: Scikit-learn Documentation

When using SVM (especially SVC with kernels), two hyperparameters are crucial for performance:

Choosing appropriate `C` and `gamma` values is essential for getting good SVM performance.

Scikit-learn provides excellent SVM implementations (`SVC` for classification, `SVR` for regression).

(Detailed code examples are available in many tutorials, the focus here is the theory.)

Interview Question

Question 1: What is a "support vector" in the context of SVM, and why are these points important?

Support vectors are the data points from the training set that lie closest to the decision boundary (hyperplane) or within the margin (or even on the wrong side in a soft-margin SVM). They are important because they are the only points that determine the position and orientation of the optimal hyperplane and the margin. Points further away don't influence the boundary.

Question 2: How does the regularization parameter 'C' affect the SVM's decision boundary and its tendency to overfit or underfit?

The 'C' parameter controls the trade-off between maximizing the margin and minimizing classification errors on the training set.

- A low C allows a wider margin and more misclassifications (more tolerance for errors), leading to a simpler model, potentially higher bias, and lower variance (less overfitting, risk of underfitting).

- A high C enforces stricter classification of training points, leading to a narrower margin and fewer misclassifications, potentially resulting in a more complex model, lower bias, and higher variance (risk of overfitting).

Interview Question

Question 3: What is the purpose of using kernels (like RBF or Polynomial) in SVM?

Kernels are used to handle non-linearly separable data. They mathematically transform the original input features into a higher-dimensional space where the data might become linearly separable. SVM then finds a linear hyperplane in this higher-dimensional space. This "kernel trick" allows SVM to create complex, non-linear decision boundaries in the original feature space without explicitly calculating the coordinates in the high-dimensional space, making it computationally efficient.

Question 4: What does the 'gamma' parameter control when using an RBF kernel in SVM?

The 'gamma' parameter defines how much influence a single training example has. A low gamma means a point has far-reaching influence (like a large radius), resulting in a smoother, more general decision boundary. A high gamma means a point has very local influence (like a small radius), leading to a more complex, potentially "wiggly" decision boundary that closely fits the training data, increasing the risk of overfitting.

Interview Question

Question 5: What is the difference between a Maximal Margin Classifier and a Support Vector Classifier (Soft Margin SVM)?

A Maximal Margin Classifier aims to find the hyperplane with the absolute widest margin *only* if the data is perfectly linearly separable. It does not tolerate any points within the margin or misclassified.

A Support Vector Classifier (Soft Margin SVM) is more flexible. It still tries to maximize the margin but allows some data points to violate the margin (be inside it or on the wrong side) to achieve better generalization, especially when data is not perfectly separable or contains outliers. The trade-off is controlled by the C parameter.

Question 6: Why is choosing the right kernel and tuning parameters like C and gamma important for SVM performance?

The choice of kernel determines the type of decision boundary the SVM can create (linear, polynomial, complex RBF). Using the wrong kernel for the data's structure will lead to poor performance. Similarly, the C and gamma parameters control the bias-variance trade-off. Incorrect values can easily lead to significant underfitting (too simple boundary, high bias) or overfitting (too complex boundary fitting noise, high variance). Proper tuning using techniques like cross-validation is essential to find the combination that generalizes best to unseen data.

Interview Question

Question 7: What is a potential drawback of using a very high value for the 'C' parameter in SVM?

A potential drawback of using a very high 'C' value is increased risk of overfitting. A high C forces the model to classify training points correctly, leading to a narrower margin and a more complex decision boundary that might be fitting noise in the training data. This can result in poor performance on new, unseen data.

Learn how to prepare for data science interviews with real questions, no shortcuts or fake promises.

See What’s Inside